Making The Case for Value Stream Analysis

By Jerry Timpson (in Analysis, Uncategorized)

Value Stream Analysis or Straight to Implementation? That’s the question.

Complex operational problems are never solved by picking at the edges. Take a deeper view and do the examination that actually delivers the results you need.

Process Problems and Business Issues

Most process improvement techniques available today include some form of evaluation or assessment to baseline performance. This is good, but it doesn’t replace analysis. Techniques like Rapid Improvement Events, workshops, A3s and even Six Sigma’s DMAIC are mostly aimed at micro-processes or, at best, macro-processes. As such, they’re fantastic for fixing small problems using bottom-up methods and countermeasures, but they aren’t going to move extensive value streams. Consequently, when these techniques are applied as core practices to solve more complex, overarching business issues, the results often fall short of what could have been achieved.

People, geographies and data – important considerations for analysis

Value Stream Analysis (the examination of causes, effects and solutions) is a better starting point for macro and mega business issues. Typical targets include supply chain, sales and operations planning, consolidation, expansion and order-to-cash. These cross-functional, often tangled value streams are thorny and resistant to change, but addressing them yields far greater results. Yet these processes, which form the fabric of the enterprise, are often avoided in favor of working on the pockets inside them. This is because the modern organization promotes functional silos, and when the inevitable pushback comes, it’s simply easier to move on to something narrower that can be accomplished without cross-functional cooperation.

Business Performance Fixes | Two Approaches

There are two ways to move forward: Direct to Implementation, or Value Stream Analysis then Implementation. With Direct to Implementation, after some process evaluations or organizational surveys have been done, the decision to proceed is driven largely by instinct and intuition, faculties that have proven seriously flawed in human beings. Conversely, with analysis, instinct and emotion are continually challenged with facts and data, because change management is built into the analysis. Both ways can work, but be aware of the decisions you’re making when you go one way or the other.

Anyone tasked with improving business performance needs to manage risks and expectations, so it’s good to state the expected results at the start. With a narrow focus, there are fewer expectations and less risk; it feels safer. If goals are bigger and bolder, then more thoughtful analysis and implementation design is essential. Here, the biggest problem to solve isn’t the technical description of how results will be achieved; that part is straightforward. Rather, gaining consensus about the path ahead is the most important outcome.

Direct to Implementation

This happens frequently. Sometimes process improvement itself is the objective, and the idea is to conduct a series of workshops, value stream mapping sessions, training and the like. We’re often told that for smaller Direct to Implementation projects, the business case isn’t important. We have never, not even once, found this to be true. Business results are always important, and inevitably someone is going to ask for them. Be ready.

Direct to Implementation requires a lot of faith that process improvement will quickly translate into better business performance. But better compared to what? Pay special attention to the starting conditions, the baseline, because it makes measuring any improvement that follows easier. For example, if you’re working on a quality problem, be sure to understand the current level of defects and their cost, what reducing them would be worth, and how any improvement will be made obvious and visible.
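The quality example above comes down to simple arithmetic. The Python sketch below is a minimal illustration, assuming defect cost scales linearly with volume; the function names and every figure in it are hypothetical, not client data.

```python
def annual_defect_cost(units_per_year: int, defect_rate: float, cost_per_defect: float) -> float:
    """Annual cost of defects: volume x defect rate x cost of each defect."""
    return units_per_year * defect_rate * cost_per_defect


def value_of_reduction(units_per_year: int, baseline_rate: float,
                       target_rate: float, cost_per_defect: float) -> float:
    """Annual savings if the defect rate falls from baseline_rate to target_rate."""
    baseline = annual_defect_cost(units_per_year, baseline_rate, cost_per_defect)
    target = annual_defect_cost(units_per_year, target_rate, cost_per_defect)
    return baseline - target


# Hypothetical figures for illustration only.
units, cost = 100_000, 40.0
print(f"Baseline defect cost: ${annual_defect_cost(units, 0.02, cost):,.0f}")            # $80,000
print(f"Value of halving defects: ${value_of_reduction(units, 0.02, 0.01, cost):,.0f}")  # $40,000
```

Once the baseline is written down this way, the same calculation can be rerun with measured data as improvements land, which keeps the result visible to whoever asks for it.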

If Direct to Implementation is the only option on the table, it should follow a specific pattern. If any of these steps is missed, particularly the Steering Team, there will be trouble.

- Steering Team formed and actively engaged

- Target ideas generated via interviews and team working sessions

- A simple, prioritized opportunity list from an impact-difficulty session, with baseline performance data provided by the target areas

- Metrics identified and documented. We call this the metrics dashboard. It’s a baseline measurement of key performance indicators

- A plan that connects implementation activities with target opportunities

- Implementation with a sequence of activities intended to affect the performance baseline

- Monitoring and adjustment of the performance dashboard throughout the project – and beyond
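The impact-difficulty session in the pattern above can be recorded very simply. This Python sketch is one hypothetical way to do it, assuming each opportunity is scored on a 1 (low) to 5 (high) scale for impact and for difficulty; the scales, the quadrant labels and the example opportunities are illustrative assumptions, not a prescribed method from this post.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    impact: int      # hypothetical scale: 1 (low) to 5 (high)
    difficulty: int  # hypothetical scale: 1 (easy) to 5 (hard)


def quadrant(op: Opportunity, midpoint: int = 3) -> str:
    """Place an opportunity in an impact-difficulty quadrant (labels are illustrative)."""
    high_impact = op.impact >= midpoint
    easy = op.difficulty < midpoint
    if high_impact and easy:
        return "quick win"
    if high_impact:
        return "major project"
    if easy:
        return "fill-in"
    return "deprioritize"


def prioritize(ops: list[Opportunity]) -> list[Opportunity]:
    """Highest impact first; among equal impact, the easiest first."""
    return sorted(ops, key=lambda op: (-op.impact, op.difficulty))


# Hypothetical opportunities from a working session.
opportunities = [
    Opportunity("Reduce changeover time", impact=4, difficulty=2),
    Opportunity("Redesign S&OP process", impact=5, difficulty=4),
    Opportunity("Relabel storage racks", impact=2, difficulty=1),
]

for op in prioritize(opportunities):
    print(f"{op.name}: {quadrant(op)}")
```

Sorting by impact first keeps the biggest opportunities at the top of the list even when they are hard; each item would then be tied to a baseline metric on the dashboard before implementation begins.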

Value Stream Analysis and Implementation Design

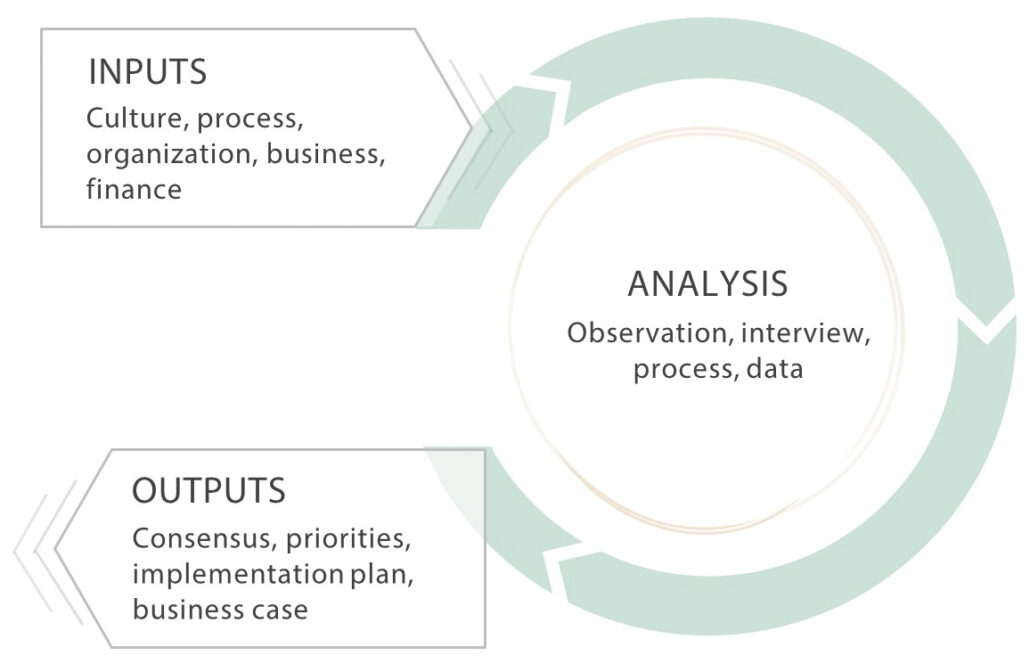

Analysis and Implementation Design Cycle: functional, operational, behavioral and financial inputs deliver a doable implementation roadmap

If you have the option or, more importantly, if your issues are big enough to warrant an up-front understanding of specific results and you suspect you’ll run into functional boundaries with numerous stakeholders, then start with an analysis. This is a highly accelerated mini-project. Its deliverables are informed clarity, organizational alignment and mobilization, a time-based action plan and a business case. It follows these basic steps:

- Governance – Steering Team formed that lasts through the project (and beyond)

- Targets and opportunities developed via interviews and working sessions

- Solutions attached to business performance objectives and metrics

- Everything tested with data and analysis — including financials

- Direct observation of measured work processes

- Process decomposition (mapping, measurement, and lots of other analytics) – to connect specific improvements to business performance

- Final report and decision for a go-ahead to implementation

This is a cycle with numerous inputs validated against data and consensus. As new information emerges, it’s evaluated again and again until well-informed, well-understood and potentially big decisions can be made.

The Steering Team meets every week for review, discussion and re-direction as necessary. This keeps stakeholders actively engaged and informed throughout the entire process — the surest way to enable consensus.

With an Analysis you get:

- Less ambiguity and an up-and-running governance structure (the Steering Team) to deal with any obstacles and barriers to progress that arise

- Strong consensus across the organization — especially with the leaders who must champion it, and,

- A validated business case; the reason for doing any of this in the first place. A business case is an “if-then” statement: “If we do these things, then we will get these results in this time.”

Either Way, Make a Plan

You might be thinking: What’s the difference? There’s a business case – or at least a result – for both. Keep in mind:

With Direct to Implementation, results are going to be less strategically chosen and less impactful. Cross-functional boundaries are harder to breach, and the business case lags because it is built up during the implementation work. There is more inherent risk because of the ambiguity that persists on a “we’ll figure it out as we go” trajectory. Without the structure an analysis provides, you’ll need to watch for waning resources and organizational focus.

With Value Stream Analysis then Implementation, results bear greater fruit with higher confidence. The business case is predictive and validated within the project work. The team-building, fact-finding and planning that are part of the analysis make implementation results more likely, because expectations and the steps required to achieve them are explicitly and thoroughly vetted. In other words, there is less risk for all stakeholders.

Sometimes you have no choice; the only way to start fixing things is to start small and work within a confined process, organization or functional boundary. If this is your best option for taking action, do it! On the other hand, if you have the ability to dig into the more complex and interesting problems of extended value streams, be bold and begin with an analysis.

It’s ok to be bold sometimes.

If you want help filling in the details beyond what fits in a 1,000-word blog post, you know where to find us.

5 Critical Success Factors for Evaluating Operational Performance

By Jerry Timpson (in Analysis, Tools and Methods)

Rapid Performance Evaluation

In this post, we outline 5 critical success factors for evaluating operational performance. This is an approach we’ve developed over years of working with clients who want a solid portfolio of improvement projects that are connected to metrics important to the organization.

—

“Ready, fire, aim.”

“If you don’t know where you’re going, any road will get you there.”

“Shoot from the hip.”

These are not sayings you want to hear when you’re trying to set direction and plan for operational improvements. Yet, with poor planning, this is often how situations are described in hindsight. Even if deeper analysis is required, an efficient and effective gap assessment is an invaluable first step.

The best results come when you engage the organization from the start and use comparative benchmarks. The five elements described here enable open discussion about operations and performance and are the starting point for an effective plan.

A good evaluation process has certain attributes. It:

- Leverages internal experts and external viewpoints

- Incorporates operational realities of the front line along with leadership priorities and,

- Gives everyone a way to make an objective assessment of the gap between the environment that exists and a standard definition of “what good looks like.”

At a site or value stream level, when done well, reliable results can be achieved within a few days.

5 Critical Success Factors for Evaluating Operational Performance

- Confirm the top-level performance metrics for the location being evaluated

- Use a template that provides performance level descriptions for key elements of the operation

- Engage the organization from the start

- Combine objective ratings with subjective views (interviews)

- Prioritize opportunities and describe an implementation approach

1. Confirm the top-level performance metrics

For any operation, there are 10 or so metrics that tell the story, for example inventory, quality, productivity and turnover (people). Vast amounts of data do not always translate into usable information. Identify and confirm the critical few operational metrics, then use them as a guide for where to look more closely during the evaluation. This becomes the baseline, and from it, progress will be obvious as improvements are made. Later on, more information and analysis may be required, but for your initial look, keep it simple.

2. Use a Template

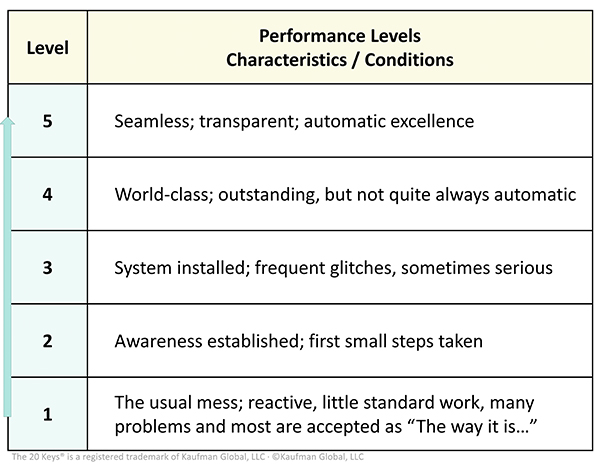

The best way to understand the gap is to use a template that describes different levels of performance for various functions. The level descriptions are based on an intuitive, general definition of performance at each level, shown here:

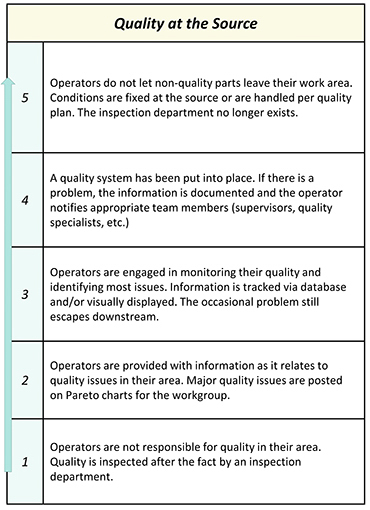

From this foundation, more detailed descriptions can be built for multiple areas of concern. We typically apply the 20 Keys for such an evaluation. One Key example (quality) is shown here.

For more examples across several industries and functional areas, see Performance Key Examples for manufacturing, oilfield and mining, and supply chain.

3. Engage the Organization from the Start

Those working inside the process, at the Gemba, know the most about what is really going on, so get them involved from the start. They use the template’s performance level descriptions as a guide for rating each element. The results show areas of opportunity.

At the same time, the leadership team and functional heads understand areas of concern for the top-level metrics. Interviewing these individuals reveals patterns, and the strengths and opportunities across the organization start to become apparent.

By engaging the organization broadly, everyone owns the answer and the plan. You get better inputs and ensure greater success when it comes to implementing changes in the name of better operational performance.

4. Combine objective ratings with subjective views

A rating system gives the organization a way to establish a baseline, clarify gaps and then measure success. As improvements proceed, better ratings show tangible evidence of the gaps closing. We use a series of three assessment tools that provide different ratings:

- Interviews – These subjective opinions matter. With a standard set of questions, priorities emerge. And we can identify alignment issues that need to be reconciled.

- Vital Systems – Using the same 1-5 rating levels noted above, we evaluate 10 vital systems, including customer focus, supply chain and engagement. Relevant vital systems vary by industry type. Each system is described in a way that identifies its critical elements. The vital systems evaluation provides a big-picture view of the operation.

- 20 Keys® – The 20 Keys drill down into the details. They provide guidance on specific improvement activities and exactly what must be done to reach the next level of performance.
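To show how ratings can establish a baseline and expose gaps, here is a minimal Python sketch. It assumes several people rate each vital system on the 1-5 scale, a hypothetical target level of 4, and a hypothetical rule that ratings two or more levels apart signal an alignment issue; none of these thresholds come from the 20 Keys® or Vital Systems materials.

```python
from statistics import mean

TARGET_LEVEL = 4      # hypothetical target on the 1-5 scale
ALIGNMENT_SPREAD = 2  # hypothetical: ratings this far apart need reconciling


def summarize(ratings: dict[str, list[int]], target: int = TARGET_LEVEL) -> list[dict]:
    """Average each system's ratings, measure the gap to target, flag disagreement."""
    rows = []
    for system, scores in ratings.items():
        if not scores or not all(1 <= s <= 5 for s in scores):
            raise ValueError(f"{system}: need at least one rating on the 1-5 scale")
        avg = float(mean(scores))
        rows.append({
            "system": system,
            "average": avg,
            "gap": max(0.0, target - avg),
            "needs_alignment": max(scores) - min(scores) >= ALIGNMENT_SPREAD,
        })
    # Largest gap first: these are the leading candidate areas of opportunity.
    return sorted(rows, key=lambda row: row["gap"], reverse=True)


# Hypothetical ratings from three internal raters per vital system.
ratings = {
    "customer focus": [3, 3, 4],
    "supply chain": [1, 2, 3],
    "engagement": [2, 2, 2],
}

for row in summarize(ratings):
    flag = "  <- reconcile views" if row["needs_alignment"] else ""
    print(f"{row['system']:<15} avg {row['average']:.1f}  gap {row['gap']:.1f}{flag}")
```

Rerunning the same summary after improvements shows the gaps closing; the target and spread thresholds would in practice be agreed with the leadership team rather than fixed in code.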

5. Prioritize Opportunities and Describe an Implementation Approach

Once the evaluation is complete and the gaps are described, the question becomes, “What next?” This question must be answered, at least at a high level. An evaluation that takes less than a week won’t yield a detailed implementation plan and business case, but it will answer questions about top operational priorities, engagement issues, leadership alignment and recommended next steps.

Internal and External Views

The internal team knows the most about what is really happening inside the operation. However, even with a good template that has standard definitions, they still have a subjective view. A level of objectivity and broad experience can be extremely beneficial. This usually comes from the outside. A good evaluation balances these two views and enables collaboration between them.

Conclusion

When done well, an evaluation of operational performance is a fantastic starting point for real change and improvement. Follow the 5 success factors described here and you’ll be miles ahead in your efforts.

====

Kaufman Global conducts Rapid Performance Evaluations for clients everywhere. Contact us to learn more about this powerful, inclusive, template-designed method for reading your operations fast.

To learn more about how we think about Analysis as it relates to our service offerings, click here.

Or, see our post on analyzing extended value streams: Making the Case for Analysis.